Writing an emulator: timing is key

We are so close! We now have scrolling pixels and sound. The pixels are scrolling a bit too fast and the sound is still kind of subpar. I won’t be trying to improve sound, but there should be something we can do about that speed issue.

The final issue

It was five articles and almost two years ago, but someone wrote:

We’ll consider that the CPU’s only state is… to execute an instruction, exactly as it’s been doing so far. We’re basically converting the body of the CPU’s execution loop into a

Tick()method.

They also mentioned at the end of that same article:

Right now this means that our CPU, with its unique state that will advance one step for each system clock tick, is running noticeably faster than our more accurate PPU. It just means it’ll be spending more cycles waiting for that frame and I can live with that for the time being.

Right! That time is long past, and so before I can conclude this series of articles, we’re going to have to look at timings again, because my last statement was clearly wrong: the CPU running too fast is visibly impacting the whole emulator.

Back in time

Maybe I should have tackled that issue right at the beginning, but back then I was very eager to get to a point where I would have something to show instead of just backtraces in a terminal. CPU timings aren’t the sexiest aspect to writing an emulator, yet they are key to it running somewhat normally.

I feel that I wrote enough about the Game Boy CPU in the first couple of articles that they should be enough of a refresher. In a nutshell, our CPU repeatedly:

- Reads an opcode from memory.

- Reads the opcode’s parameters from memory (if any).

- Updates its internal state depending on that opcode and its parameters (if any).

- Writes a result to memory (if needed).

I’ll refer to these things further on as “micro-operations” or just “operations”1, which will be used as building blocks for all our CPU instructions. Apart from reading opcodes, all operations are optional (the best example being the NOP instruction, which does nothing and just incurs a single read from memory for the opcode itself).

This means that different CPU instructions take a different amount of time depending on how many operations they require. This depends on:

- How long the opcode for that CPU instruction is (1 or 2 bytes in case of extended instructions).

- Whether the instruction involves reading from or writing to RAM.

- Some internal behavior mentioned in documentation that I’m not sure how to explain (fortunately, this is rare).

I briefly mentioned that the Game Boy’s RAM was slower than the CPU, and that any operation involving RAM would normally take several CPU ticks to complete.

The reality is somewhat more complex. If you would like more details, I encourage you to go check how coffee-gb did it, by implementing a system even closer to how a CPU works internally.

I did something a bit similar. For the vast majority of instructions, we can simply look at how many memory accesses are needed. Each byte read from or written to RAM should take 4 CPU ticks. Don’t just take my word for it, let’s look at the instruction table we used at the very beginning (or, if you like their format better, the list of instructions on Pan Docs).

Regardless, you will see, for each instruction, a duration in machine cycles (what I’ve been calling ticks all along).

We haven’t paid any attention to that number before, but it’s now the one thing we want to implement.

Take all the time you need

You’d think we could very simply store the number of ticks a CPU instruction needs to complete somewhere, and wait that number of ticks whenever we execute the corresponding instruction. It might even work well enough for our example program, but some conditional instructions take a various amount of cycles (JR NZ,r8, for instance, takes less time if the condition is false because it doesn’t need an operation to update PC for the jump) and we can actually do much better with little more effort.

What if we defined a CPU instruction as a list of consecutive micro-operations? It would translate into code as a list of functions that would each update the CPU state for each operation, spending the appropriate amount of ticks on each. Going back to the list of things I said a CPU could do, let’s see what basic operations we truly need to handle, and how long they take. We have:

- Reading a byte from memory. This takes 4 ticks.

- Writing a byte to memory. This also takes 4 ticks.

- Updating the CPU’s internal state. This can be considered instantaneous, it can happen at the end of a memory access at no extra cost.

Wait, is that it?

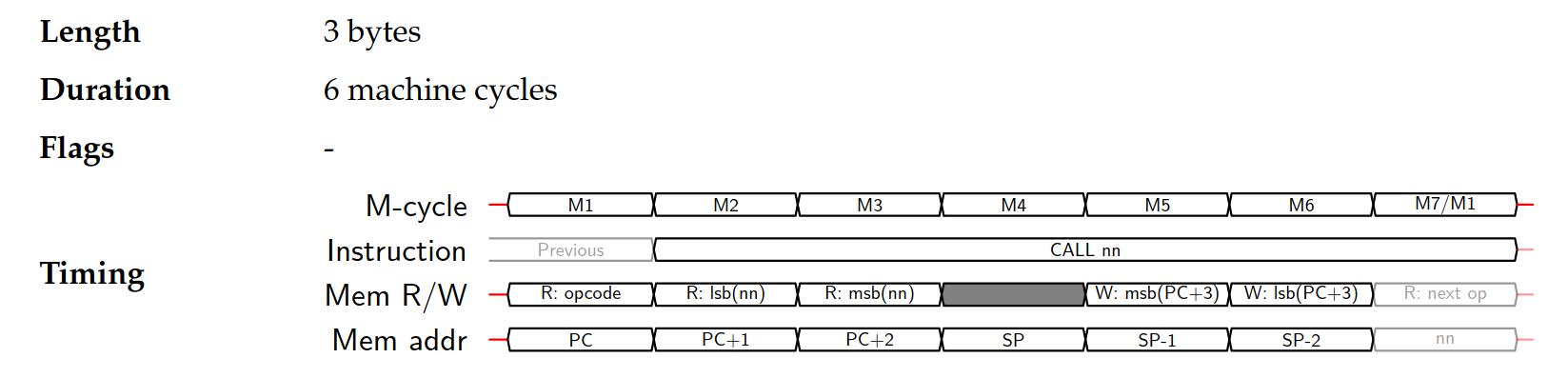

By and large, yes! We can even compare that with the list of CPU instructions and see right away how the ones that need no specific memory access only take the time needed to read the opcode itself, for instance. There is also deeper, more technical documentation, though it’s not always complete. Most notably, the fascinating Cycle-Accurate Game Boy Docs and Gekkio’s amazing Complete Technical Reference. I’ve used the latter a lot as reference, I really love how it illustrates CPU instructions down to the cycle:

CALL from Gekkio’s Complete Technical Reference, notice the empty operation right before pushing PC to the stack?Okay, so that means we’ll need to rewrite some of the functions emulating our CPU instructions to split them into successive operations.

Didn’t we have a FIFO somewhere?

One easy way to enqueue operations and then execute them separately in the right order would be to reuse that FIFO structure we made to shift pixels out of the PPU. Its size is fixed to 16 items, but it’s more than enough to hold all the operations we need for any CPU instruction2.

This means we can split the CPU’s Tick() method into two states: one where the CPU is just reading the opcode, and one where it will be executing each of the operations needed (if any). We should start with that.

func (c *CPU) Tick() {

// Any CPU operation takes 4 ticks.

c.ticks++

if c.ticks < 4 {

return

}

c.ticks = 0

switch c.state {

case FetchOpcode:

// The next opcode to execute is the byte at the exact address

// pointed to by PC.

opcode := c.mmu.Read(c.PC)

c.PC++

// Choose in which instruction set (base or extended) we'll

// look up the opcode.

if opcode == 0xcb {

// Extended instruction set, opcode is one more byte, don't update

// current state and keep fetching.

c.extendedSet = true

return

}

// Try finding a corresponding instruction in the instructions

// mapping. Keys that don't have a value will return 'nil'.

var instruction func(*CPU)

if c.extendedSet {

instruction = extendedInstructions[opcode]

} else {

instruction = instructions[opcode]

}

// By this point we got the instruction we want, reset the extended set

// bit for the next instruction.

c.extendedSet = false

instruction(c) // Execute instruction.

}

}

(As you can see, there is also a new state property in our CPU with associated values, which work exactly like they do in our PPU.)

So far, we only added a 4-tick delay for each state, similar to what we did for the Pixel Fetcher a few articles ago, and enclosed the older code in a switch/case statement. Reading an opcode byte now takes 4 ticks per byte (so extended instructions take twice as long). We still execute an instruction in a single step, but let’s see if that 4-tick delay already makes a difference.

I can see and hear a tiny improvement, so let’s move on to the next part: instead of executing the instruction as a single function, we’ll have that function enqueue the operations it still needs to complete, and then run one of these operations every 4 ticks until we run out. Then, we’ll go back to reading the opcode for the next instruction.

The updated state machine for the CPU looks like this:

func (c *CPU) Tick() {

// Any CPU operation takes 4 ticks.

c.ticks++

if c.ticks < 4 {

return

}

c.ticks = 0

switch c.state {

case FetchOpcode:

// The next opcode to execute is the byte at the exact address

// pointed to by PC.

opcode := c.mmu.Read(c.PC)

c.PC++

// Choose in which instruction set (base or extended) we'll

// look up the opcode.

if opcode == 0xcb && !c.extendedSet {

// Extended instruction set, opcode is one more byte, don't update

// current state and keep fetching.

c.extendedSet = true

return

}

// Try finding a corresponding instruction in the instructions

// mapping. Keys that don't have a value will return 'nil'.

var instruction func(*CPU)

if c.extendedSet {

instruction = extendedInstructions[opcode]

} else {

instruction = instructions[opcode]

}

// By this point we got the instruction we want, reset the extended set

// bit for the next instruction.

c.extendedSet = false

// Create as many micro-operations as needed to complete this CPU

// instruction in the proper number of cycles. Note that some

// instructions complete "instantaneously" (i.e. within the 4 cycles it

// takes to fetch and read the opcode). In that case, we just keep

// fetching opcodes.

instruction(c)

// If we have more micro-operations to perform, do that in a separate

// state.

if c.ops.Size() > 0 {

c.state = Execute

}

case Execute:

opItem, _ := c.ops.Pop()

op := opItem.(func(c *CPU)) // Convert FIFO generic item into proper type

op(c) // Perform operation

// When we run out of operations, go back to fetching opcodes.

if c.ops.Size() == 0 {

c.state = FetchOpcode

}

}

}

Here we added an Execute state where we check a new property of our CPU: ops, which is how I named the micro-operations FIFO I added to the structure. Then, if there are any operations in it, we’ll go to that Execute state and perform one of these operations every 4 ticks.

What are we enqueueing3 though? So far, CPU instructions are simple functions taking a CPU pointer to update it (if needed). Well, let’s work with that, then! Our FIFO doesn’t care what we put in it as long as we can tell. Let’s fill it with individual operations that are just functions taking a CPU pointer, just like our instructions were implemented before.

This has one immediate advantage: all instructions that already execute within 4 ticks don’t even need any change!

As for the others, we’re going to have to split their body into the proper number of operations, but considering there are only three cases to handle, it’s not going to be that hard.

Specific examples

There are currently 45 CPU instructions implemented in our example program, and yes, I converted all of them to use our micro-ops FIFO, but it was rather fast, honestly. I’ll just list a few examples based on the kinds of instructions we have.

Immediate instructions

Those are instructions completing within the time it takes to read their opcode (remember, we’re always going to need at the very least 4 ticks for any instruction). If you look at your opcode list of choice, that’s all the instructions marked as taking 4 ticks in the basic set, and all those taking 8 ticks in the extended set.

None of these instructions (and it’s more than a third of them all) will need any change. We’ll still call the related functions from the CPU’s Tick() method in the FetchOpcode state and directly go to reading the next opcode.

I’m just putting one next as an example for consistency with the following sections4.

// XOR A (4 ticks)

func xora(c *CPU) {

c.A ^= c.A

}

That XOR A (which just sets A to zero) is supposed to complete within 4 ticks, which have already elapsed by the time we have read the corresponding opcode and figured the instruction was XOR A. So we just do it and return, the CPU’s operations FIFO is still empty and it will move on to read the next instruction.

Instructions reading from memory

For that matter, the next instruction is LD HL,$9fff which reads a 2-byte parameter from memory and stores its value in the HL register. Hence, it should take 12 ticks: the 4 ticks it took to read the opcode, then 4 ticks for each byte read from memory, 0x9f and 0xff (which will actually be in the reverse order because endianness).

The original function we wrote looked like this:

// LD HL,d16

func ldhld16(c *CPU) {

// Read L first because little-endian.

c.L = c.mmu.Read(c.PC)

c.H = c.mmu.Read(c.PC + 1)

c.PC += 2

}

It’s already pretty clearly defined: we read one byte, assign it to a register. We read a second byte, assign it to another register. Then we increment the Program Counter by 2 all at once, but in reality we should have just incremented it by one after each call to Read(). This is exactly how we’re going to do it with the new micro-operations FIFO:

// LD HL,d16 (12 ticks)

func ldhld16(c *CPU) {

// Read the first byte into the lower register.

c.ops.Push(func(c *CPU) {

c.L = c.mmu.Read(c.PC)

c.PC++

})

// Read the second byte into the higher register.

c.ops.Push(func(c *CPU) {

c.H = c.mmu.Read(c.PC)

c.PC++

})

}

The first thing you should notice is that this function no longer has any direct effect on the CPU: when ldhld16 returns, the CPU’s registers will be exactly as they were when we called that function. However, we now have two operations queued up in the CPU’s FIFO, each of which will update the CPU in a different way but with an identical result in the end. We will just take longer to perform that operation: 2×4 ticks. Adding the time it took to read the opcode, this adds up to 12 ticks as stated in the documentation, and should now be more accurate than our previous implementation was.

The function for loading a value into a single register, such as LD A,$80, is very similar. We just push one operation to the CPU’s FIFO and this only adds up to 8 ticks, as specified.

Instructions writing to memory

In fact, this can also be said for any instruction storing the value of a single register into a known memory address (as opposed to an address given in parameter, which will need more ticks to be read from memory first).

For instance, LD (HL),A will be written like this:

// LD (HL),A (8 ticks)

func ldhla(c *CPU) {

c.ops.Push(func(c *CPU) {

c.mmu.Write(c.HL(), reg)

})

}

Single memory write, single operation. Pretty easy so far! Most of the instructions we implemented are like this one.

But then, there are some more complex instructions doing it all at once: read, write, update, and… more?

Complex instructions

CALL d16 is such an instruction. It is the largest of the instructions we have implemented, and the only one taking 24 ticks to execute5. It’s got it all: reading the address to jump to from memory (8 ticks for the two bytes), then writing the current value of PC to the stack in memory (also 8 ticks for the two bytes) and then… updating PC, which I said earlier should be instantaneous, so why doesn’t this instruction only take 20 ticks?

It’s the push to the stack that, for some reason, takes 4 ticks more than it should, and to be honest I’m not sure why. Pushing a 16-bit value to memory should only take 8 ticks, but in that specific case, it takes 126. I didn’t find a clear answer in any of the documentations I mentioned earlier, but they do mention that pushing to the stack takes 4 operations: reading the opcode, writing to SP and decrementing it, writing to SP and decrementing it… and an extra 4 ticks.

I trust these sources, so I just rolled with it. If you do know more, I’m curious! To be fair, that won’t make much difference to our example program, but if you do want to go further, this is the kind of little detail you’ll be dealing with a lot.

In the end, this is what the code for this instruction will look like:

// CALL d16 (24 ticks)

func call(c *CPU) {

// We need PC to read the two address bytes following the opcode. This is

// where we need two temporary registers.

// Read the first (low) address byte (4 ticks).

c.ops.Push(func(c *CPU) {

c.tmp1 = c.mmu.Read(c.PC)

c.PC++

})

// Read the second (high) address byte (4 ticks).

c.ops.Push(func(c *CPU) {

c.tmp2 = c.mmu.Read(c.PC)

c.PC++

})

// Push current PC address (the next instruction to execute) to the stack.

// For some reason that push operation incurs an extra 4-tick delay.

c.ops.Push(func(c *CPU) {

// Do nothing, the CPU will just wait an extra 4 ticks.

})

// Push high byte to stack.

c.ops.Push(func(c *CPU) {

c.SP--

c.mmu.Write(c.SP, uint8(c.PC>>8))

})

// Push low byte to stack and update PC in the same operation.

c.ops.Push(func(c *CPU) {

c.SP--

c.mmu.Write(c.SP, uint8(c.PC&0xff))

c.PC = uint16(c.tmp2)<<8 | uint16(c.tmp1)

})

}

You can see a mix of all we’ve described before: two reads from memory, two writes to memory, and that extra empty operation. I also tacked the update to the PC register right at the end of the last operation, because I didn’t feel like writing specific code to differentiate queued operations taking four ticks and instantaneous ones.

Also, temporary registers are mentioned. These are now needed, because where we used to update PC in a single assignment, we now need it to read each byte from memory in two distinct operations, hence we can’t overwrite PC until we have read the full address to jump to. We can’t store that in a local variable either since each of these operation functions will run independently from the others. The CPU, being the one persistent object here, is then used to store those temporary bytes until we compute a 16-bit value to put in PC.

It may not be as much as a hack as I felt it was at first, as I have since then found Z80 documentation mentioning temporary registers W and Z that seem to be used for that exact purpose.

The final result

Is that it? Have we reached a result that we could comfortably call the full execution of the Game Boy’s boot ROM? I mean, sure, the sound part is somewhat lacking, but we still have some, and scrolling pixels. And now, hopefully, the whole thing is running at roughly the speed of a real Game Boy.

Let’s see what happens now when we attempt to run a game…

All right, I’m calling it. We’re done!

It’s been a lot more work than I thought it would be (again, sound is complicated) but we got there. We have written code to boot a Game Boy from scratch. The final version of our example program is just shy of 2000 lines of code — less than 1400 if you strip out the comments. Not bad for what is now essentially an emulator, though it’s still missing quite a few features.

Where to go from there?

From this point on, the best thing to do would be implementing the missing CPU instructions and try to run an actual Game Boy game. The example above just crashed right after boot since it tried executing instructions outside the set we implemented.

If you do feel like going further, all the references I listed in these articles should prove useful, though there are many more. And then, well, the best test is to try running a simple game. Tetris comes to mind of course, but some others like Dr. Mario or Bombjack are even simpler, also fit in 32KB and might run better on an incomplete emulator.

For that matter, I’d also advise implementing buttons and joypad inputs early on, because our current implementation behaves as if all buttons were pressed all the time7, which soft-resets a lot of games, giving the impression they’re not running at all.

If you’d like to see more quirky implementation details, I plan on writing further, much shorter articles just to show off some of the funny bugs I encountered while developing that example emulator or my own code.

At last, and again…

Thank you very much for reading! I had fun writing about this project, I hope it proved useful or at least mildly interesting to someone.

References

- coffee-gb (CPU operations implementation)

- Gameboy (LR35902) OPCODES

- Pan Docs: CPU Instruction Set

- The Cycle-Accurate Game Boy Docs

- Game Boy: Complete Technical Reference

- Z80 design (Wikipedia)

- Z80 temporary registers W and Z

- Awesome Game Boy Development – Massive Collection of Resources

- Example program: timing is key

- Example ROM

You can download the example program above and run it anywhere from the command line:

$ go run timing-is-key.go

It expects a dmg-rom.bin file to be present in the same folder. Note that it might take a little while the first time you run the program as it will need to build the SDL libraries.

You can also download the same example ROM from last time and run it from the command line:

$ go run timing-is-key.go cartridge.gb

At last, you can substitute cartridge.gb with the path to any GB ROM you have and see what happens!

-

You might also see these referred to as “ops” or “micro-ops” in code, as well as in other sources. ↩︎

-

In reality we’ll never have more than five at a time. But if for some reason you accidentally reduce that to, say, three, code breaks in really interesting ways! I’ll show you next time! ↩︎

-

I’m so sorry. I can’t see that word and not think about that comic, and now you can’t either. ↩︎

-

I’m using helper functions in the actual program, to reduce duplicated code, but for the sake of clarity I’ll ignore those in the code quoted further below. ↩︎

-

That’s including the 4 ticks to read the opcode, so we still only need a FIFO big enough to hold the 5 remaining operations. ↩︎

-

You can see it in the list of CPU instructions:

PUSH AF,PUSH BC,PUSH DEandPUSH HLare all marked as taking 16 cycles, including the initial opcode read, where I would have expected them all to only take 12. You’ll note thatPOPinstructions don’t seem to have that issue and are listed as taking 12 cycles. ↩︎ -

Long story short: all registers used for button presses are set to zero by default in our program, and the Game Boy’s inputs work in inverse logic. ↩︎